HDR 101: What it is, why 10-bit and how to deliver it

There’s been a lot written in the media about HDR and its benefits. But CEDIA’s Director of Technical Content David Meyer takes a different approach to explain what HDR is, why it must reside in at least a 10-bit signal, and some considerations in delivering it to a compatible HDR display.

High dynamic range (HDR) video seemingly came from nowhere to become the latest ‘must-have’ TV feature. But don’t think it’s another 3D-like gimmick. Joel Silver of ISF described HDR as “the most important thing to happen to video since the introduction of colour”. That’s huge.

So, what actually is HDR? We’ll get to that shortly. Understanding it and why we need it is one thing, but the greater challenge is how to get a reliable, complete HDR signal to a compatible video display. It seems for HDR we’ve found ourselves in the middle of another format war, but that’s further complicated by the rapidly changing face of how we access video content, and how we move it around the home.

There are many considerations for signal integrity and transmission line capability for HDR video: HDMI, HDBaseT or optical fibre, compressed or uncompressed, etc? So where to begin…

How we see dynamic range

The luminance of a scene or display is measured in candela per square metre (cd/m²), or nits. The darkest, unpolluted, moonless night sky can measure as low as 0.0004 nits, barely enough to make out a few dark shapes, but not to see our way around, let alone any detail or colour. The other end of the scale is high noon on a bright sunny day, where our eyes’ limit of around 50,000 nits will see us diving for our sunglasses. This of course varies a bit between people, as will factors like how many drinks we had the night before, but you get the idea.

This absolute range equates to the best part of 30 stops. A ‘stop’ is a factor of two — each stop higher is a doubling of the quantity of light, each stop lower is half. That’s because our eyes are much more sensitive to changes in darker tones than they are to light. It’s not unlike octaves in sound, where we hear subtle changes in bass frequencies, declining as we move through mids and especially highs. Back to light, one stop below 50,000 nits is 25,000, then a stop lower again is 12,500, then 6,250 nits, and so on. 30 stops down we’re in a 0.00005 nit black hole.
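The stop arithmetic above is easy to check for yourself. Here is a minimal Python sketch using only the figures already quoted; the function names are purely illustrative and don't come from any standard or library.

```python
import math


def stops_between(low_nits: float, high_nits: float) -> float:
    """Number of stops (doublings of light) separating two luminance levels."""
    return math.log2(high_nits / low_nits)


def nits_after_stops_down(start_nits: float, stops: int) -> float:
    """Luminance after halving `start_nits` once per stop."""
    return start_nits / (2 ** stops)


# Moonless night sky up to bright midday, using the figures quoted above.
print(f"{stops_between(0.0004, 50_000):.1f} stops")  # 26.9 stops

# Walk down from 50,000 nits one stop at a time: 50000, 25000, 12500, 6250.
for n in range(4):
    print(n, nits_after_stops_down(50_000, n))

# Thirty stops below 50,000 nits lands near 0.00005 nits.
print(nits_after_stops_down(50_000, 30))
```

The measured extremes work out to roughly 27 stops, which is the "best part of 30" mentioned above.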

The absolute range of our vision as mentioned above is possible because our eyes dilate or constrict, and this 30 stop range allows for full constriction to full dilation. The work of Peter Barten in the Netherlands in the late 1990s found that in any given scene (that is, at any set level of dilation) we can see somewhere between a 13 and 14 stop range, which equates to around 10,000:1 contrast ratio (calculated as just over 2¹³:1). By comparison, the SDR video we’ve been delivered to date from TV, Blu-ray, OTT, etc. is limited to 0.5-100 nits, being only eight stops, or around 250:1. Blah.
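To see how stops and contrast ratios relate, here is a short Python sketch. It assumes nothing beyond the numbers in the paragraph above, and the helper names are made up for illustration.

```python
import math


def contrast_ratio(stops: float) -> float:
    """Contrast ratio (x:1) spanned by a given number of stops."""
    return 2.0 ** stops


def stops_from_range(black_nits: float, white_nits: float) -> float:
    """Stops between the darkest and brightest level of a range."""
    return math.log2(white_nits / black_nits)


# The 13 to 14 stop window of a single scene brackets 10,000:1.
print(contrast_ratio(13))  # 8192.0
print(contrast_ratio(14))  # 16384.0

# SDR at 0.5 to 100 nits.
sdr_ratio = 100 / 0.5
print(sdr_ratio, round(stops_from_range(0.5, 100), 2))  # 200.0 7.64
```

Strictly speaking, 0.5 to 100 nits is 200:1 or about 7.6 stops, so "eight stops, or around 250:1" is that figure rounded up to the nearest whole stop.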

So the goal of HDR is to meet or exceed this 14 stop contrast sensitivity range of our eyes, and thereby bring more realism to video reproduction.

50 Shades of Grey

Nope, not the movie… oh, we’re so not going there. But shades of grey are inherent to digital video bit depth, which in turn brings tonal range to the colour gamut.

Smooth gradients are key to high performance video. Banding is the enemy, resulting in a video processor having to ‘dither’ the image to smooth things out. Dithering is the practice of using dots either clustered or spread out across the colour shade transitions to achieve a visible gradient effect, and it’s far more common than you may think.
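For a feel of how spreading dots around can fake a shade that isn't available, here is a small Python sketch of ordered dithering with a 4x4 Bayer matrix. This is just one well-known dithering technique chosen for illustration; it is an assumption, not a description of what any particular video processor does, and the function names are hypothetical.

```python
import math

# Standard 4x4 Bayer index matrix (values 0..15).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def quantize(value: float, levels: int) -> float:
    """Snap a 0..1 value to the nearest of `levels` evenly spaced shades."""
    return round(value * (levels - 1)) / (levels - 1)


def ordered_dither(value: float, x: int, y: int, levels: int) -> float:
    """Pick the shade just below or just above `value`, depending on a
    position-dependent threshold, so the local average approximates it."""
    threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16
    scaled = value * (levels - 1)
    lower = math.floor(scaled)
    step = lower + (1 if scaled - lower > threshold else 0)
    return min(step, levels - 1) / (levels - 1)


def tile_average(value: float, levels: int) -> float:
    """Average dithered output over one 4x4 tile of constant input."""
    total = sum(ordered_dither(value, x, y, levels)
                for y in range(4) for x in range(4))
    return total / 16


# A 30% grey with only two shades available (black and white).
print(quantize(0.3, 2))      # 0.0: plain rounding loses the tone entirely
print(tile_average(0.3, 2))  # 0.3125: 5 of the 16 pixels are lit
```

Viewed from a distance, the lit and unlit pixels blend into something close to the intended grey, which is the visible gradient effect described above.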

We can’t detect banding, and therefore dithering wouldn’t be necessary, if video is presented with at least 50 shades per stop of light, hence my earlier 50 Shades reference. 8-bit video equals 256 shades (2⁸) all up. At 50 shades per stop this means only five stops, not eight stops as the brightness range dictates. Either way it’s low, showing we’re at the end of the road for 8-bit as it’s barely sufficient for SDR.

For HDR we’re talking about extending the blacks down to an incredible 0.0005 nits, and (in time) as bright as 10,000 nits. That’s 24 stops! Together with the demands of wide colour gamut (WCG), 10-bit with its 1,024 shades (2¹⁰) is the absolute minimum for HDR, and 12-bit is the optimum to get us the spectacular full range.
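As a rough check on the bit depth arithmetic, the Python sketch below works out how many stops each bit depth covers at 50 shades per stop. It assumes shades are spread evenly across stops and that every code value is usable, which is a simplification: real HDR transfer functions such as PQ distribute code values non-uniformly, so treat the output as a back-of-envelope guide only.

```python
import math

SHADES_PER_STOP = 50  # banding threshold quoted above


def shades(bit_depth: int) -> int:
    """Total number of shades a given bit depth can represent."""
    return 2 ** bit_depth


def stops_covered(bit_depth: int, shades_per_stop: int = SHADES_PER_STOP) -> float:
    """Stops coverable without visible banding, under the even-spread assumption."""
    return shades(bit_depth) / shades_per_stop


# 8-bit: 256 shades, 5.1 stops. 10-bit: 1024, 20.5. 12-bit: 4096, 81.9.
for bits in (8, 10, 12):
    print(bits, shades(bits), round(stops_covered(bits), 1))

# The full HDR range quoted above: 0.0005 to 10,000 nits.
hdr_stops = math.log2(10_000 / 0.0005)
print(hdr_stops, math.ceil(hdr_stops * SHADES_PER_STOP))
```

On these assumptions the full range is a little over 24 stops and wants roughly 1,200 shades, so 10-bit gets close while 12-bit covers it with room to spare.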

Static vs Dynamic HDR

Most ‘flavours’ of HDR, including HDR-10/10+ and Dolby Vision, rely on metadata in the signal to carry instructions to a display, so the display knows what to do. For the record, the ‘10’ in HDR-10 represents the minimum requirement for a 10-bit signal. I repeat, there is no 8-bit HDR.

An HDR movie on UHD Blu-ray is authored to a maximum brightness range. Early examples were authored to around 1,200 nits, but there’s now some up to 4,000 nits. Static HDR means the parameters are set at the beginning of a programme and don’t move. In practice this might mean a programme is set to the maximum brightness and works down from there. This in turn may limit the blacks, and with it, the detail in the darker scenes. It’s like fixing the dilation of our eyes, not allowing them to change for an entire movie. It’s unnatural.

Dynamic metadata can vary HDR parameters frame-by-frame. This means that the target 14-stop scene range can swing up and down the 24-stop absolute range mentioned above, providing the best possible effect and detail in dark or bright scenes in turn, delivering more realism.
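The difference between the two approaches can be shown with a toy Python model. Everything here is an assumption for illustration: the scene list, the 600 nit display, and especially the simple linear gain, which stands in for the far more sophisticated tone-mapping curves real displays use. It also adjusts per scene rather than per frame to keep things short.

```python
# Hypothetical programme: (scene name, brightest pixel in nits).
SCENES = [("night alley", 100.0), ("office", 600.0), ("sunlit snow", 4000.0)]
DISPLAY_PEAK_NITS = 600.0  # assumed display capability


def static_gains(scenes, display_peak):
    """One gain for the whole programme, set by its single brightest scene."""
    programme_peak = max(peak for _, peak in scenes)
    gain = min(1.0, display_peak / programme_peak)
    return [gain for _ in scenes]


def dynamic_gains(scenes, display_peak):
    """A separate gain per scene, set by that scene's own peak."""
    return [min(1.0, display_peak / peak) for _, peak in scenes]


static = static_gains(SCENES, DISPLAY_PEAK_NITS)
dynamic = dynamic_gains(SCENES, DISPLAY_PEAK_NITS)
for (name, peak), s, d in zip(SCENES, static, dynamic):
    print(f"{name:12s} static {peak * s:7.1f} nits   dynamic {peak * d:7.1f} nits")
```

In this toy model the static approach dims the night alley to 15 nits because of one bright scene elsewhere in the programme, while the dynamic approach leaves it at its authored 100 nits and only scales back the sunlit snow.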

HDR-10 uses static metadata, but HDR-10+ and Dolby Vision are both dynamic — we’ll circle back to this soon. HLG doesn’t need metadata (by the way, I’m not going into the HDR formats beyond this).

Delivering the HDR payload

So, we’ve determined that HDR must at least be a 10-bit signal. Further to that, RGB/4:4:4 is uncompromising, but 4:2:0 is also totally compatible (and of course 4:2:2). So what does that mean for delivering the signal?
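To get a sense of what the chroma subsampling choice means for the payload, here is a rough Python estimate of the uncompressed active video data rate. The 3840x2160 at 60 frames per second figures are assumptions chosen for illustration, and the result deliberately ignores blanking intervals and any link encoding overhead, so real transport rates will be higher.

```python
# Average samples carried per pixel for each chroma format.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_video_gbps(width: int, height: int, fps: float,
                      bit_depth: int, chroma: str) -> float:
    """Uncompressed active-picture data rate in gigabits per second."""
    bits_per_pixel = bit_depth * SAMPLES_PER_PIXEL[chroma]
    return width * height * fps * bits_per_pixel / 1e9


# Assumed example: 4K at 60 fps, 10-bit.
for chroma in SAMPLES_PER_PIXEL:
    print(chroma, round(active_video_gbps(3840, 2160, 60, 10, chroma), 2))
# 4:4:4 14.93
# 4:2:2 9.95
# 4:2:0 7.46
```

Dropping from 4:4:4 to 4:2:0 halves the payload, which is why 4:2:0 is so common when the transport is the bottleneck.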

Consider the use cases for starters. There’s really not a lot of content yet, but that is growing daily. Right now it’s really for the main viewing screen/s in the home, rather than whole-home distribution. There’s no HDR on Foxtel, which leaves some UHD Blu-ray discs and over-the-top (OTT) streaming content such as Netflix. Some markets have 4K HDR (HLG) broadcasts, but not yet for Australia or New Zealand. There’s also a growing list of gaming titles with HDR, but they’re certainly not for multi-room distribution. Not yet anyway.

When it comes to OTT, the main bandwidth consideration is your internet connection, and whether it can support a 4K stream. Netflix, as the OTT leader, launched with a recommended 15Mbps, but these days they suggest a more sustainable, not to mention higher quality, 25Mbps or higher. That enables support for HDR too. Providing you have that (how’s your NBN?), the real challenge is getting the HDR metadata to the TV’s processor so that it renders in all its glory.

I’ve heard many reports of integrators not being able to get HDR from external devices to the display. It’s trial and error, and this is not the place to comment on specific products. The most reliable way to deliver true HDR — e.g. 4K Dolby Vision from Netflix to a supporting LG OLED TV — is to use the Netflix app in the TV. But that then compromises your ability to get the accompanying Dolby Atmos soundtrack from the display back to the AVR. Grrr!

The best thing to do is try combinations of external devices and displays to determine what works, or consult the CEDIA Community (community.cedia.net) to learn from peers’ experience. There are certainly interoperability challenges, but I imagine this will get better as we gain technical maturity.

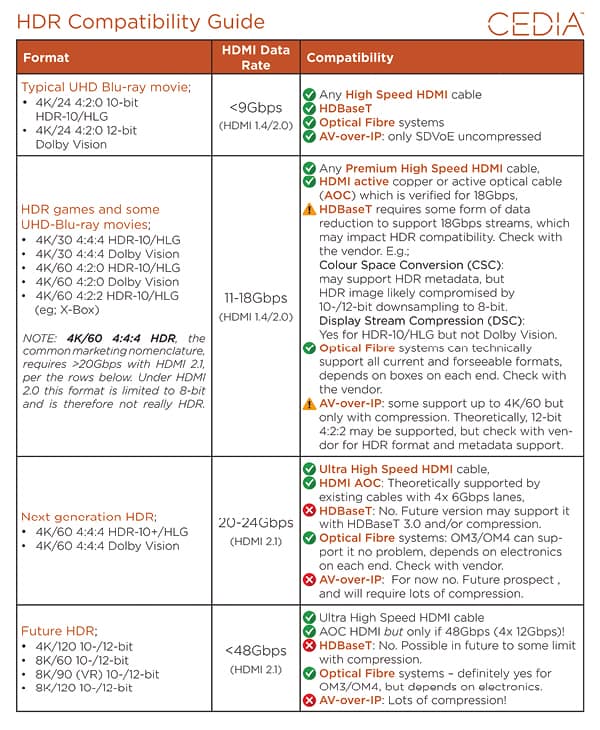

When we’re talking delivery and/or distribution of HDR content that’s already in the home, such as from a UHD Blu-ray, we need to consider the bandwidth and metadata support characteristics of the transport options. Table 1 lists the common HDR formats, and corresponding transport compatibility.

In summary, the goal of HDR is to meet or exceed the 14-stop contrast sensitivity range of human vision, and thereby bring more realism to video reproduction. This requires at least a 10-bit signal, compatible devices for the HDR format in question (that’s a whole other article, as is the big bag of colour gamut!), and of course having a supporting transport to get the signal there intact.

There is no 8-bit HDR. There’s nothing wrong with a device converting back to 8-bit to ensure an image is at least displayed, but it will no longer be HDR. With that in mind, happy integrating!
